晚上和朋友出去吃饭,回来闲着,写点,东西。

前段时间遇到一个不错的东西,Locally linear embedding

(LLE),使用这种算法可以进行非线性降维,关键是其能够使降维后的数据保持原有拓扑结构。具体理论部分可以参考这个址web,这里只是简略对算法过程和实现做个简单的介绍。

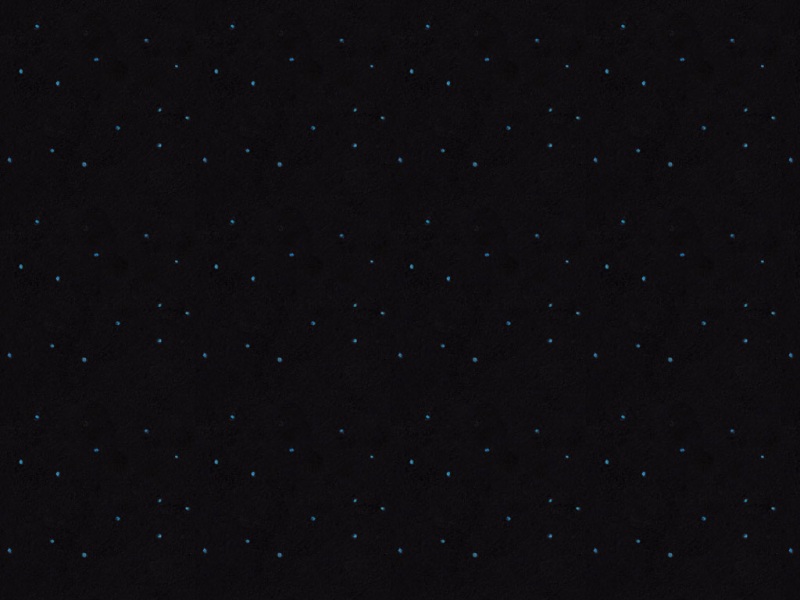

先给出一张下面算法得到的图

,图中第一幅为原始数据,第三个为降维后的数据,可以看出处理后的低维数据保持了原有的拓扑结构。

LLE算法可以归结为三步:

(1)寻找每个样本点的k个近邻点;

(2)由每个样本点的近邻点计算出该样本点的局部重建权值矩阵;

(3)由该样本点的局部重建权值矩阵和其近邻点计算出该样本点的输出值。

Matlab

LLE主函数:

% LLE ALGORITHM (usingK nearest

neighbors)

% [Y] = lle(X,K,dmax)

%

X:data

as D x N matrix (D = dimensionality, N =

#points)

%

K:number

of neighbors

%

dmax:max embedding

dimensionality

%

Y:embedding

as dmax x N matrix

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

function [Y] = lle(X,K,d)

[D,N] = size(X);

fprintf(1,'LLE running on %d points in %d

dimensionsn',N,D);

%% Step1: compute pairwise distances & find

neighbour

fprintf(1,'-->Finding %d nearest

neighbours.n',K);

X2 = sum(X.^2,1);

distance =

repmat(X2,N,1)+repmat(X2',1,N)-2*X'*X;

[sorted,index] = sort(distance);

neighborhood = index(2:(1+K),:);

% Step2: solve for recinstruction

weights

fprintf(1,'-->Solving for reconstruction

weights.n');

if(K>D)

fprintf(1,'[note:

K>D; regularization will be used]n');

tol=1e-3; %

regularlizer in case constrained fits are ill

conditioned

else

tol=0;

end

W = zeros(K,N);

for ii=1:N

z =

X(:,neighborhood(:,ii))-repmat(X(:,ii),1,K); % shift ith pt to

origin

C =

z'*z;%

local covariance

C =

C +

eye(K,K)*tol*trace(C);%

regularlization (K>D)

W(:,ii)

=

Cones(K,1);%

solve Cw=1

W(:,ii)

=

W(:,ii)/sum(W(:,ii));%

enforce sum(w)=1

end;

% Step 3: compute embedding from eigenvects of cost

matrix M=(I-W)'(I-W)

fprintf(1,'-->Computing

embedding.n');

% M=eye(N,N); % use a sparse matrix with storage for 4KN

nonzero elements

M =

sparse(1:N,1:N,ones(1,N),N,N,4*K*N);

for ii=1:N

w =

W(:,ii);

jj

= neighborhood(:,ii);

M(ii,jj)

= M(ii,jj) - w';

M(jj,ii)

= M(jj,ii) - w;

M(jj,jj)

= M(jj,jj) + w*w';

end;

% calculation of embedding

options.disp = 0;

options.isreal = 1;

options.issym = 1;

[Y,eigenvals] = eigs(M,d+1,0,options);

Y = Y(:,2:d+1)'*sqrt(N); % bottom evect is [1,1,1,1...]

with eval 0

fprintf(1,'Done.n');

%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%%

% other possible regularizers for

K>D

%C

= C +

tol*diag(diag(C));%

regularlization

%C

= C +

eye(K,K)*tol*trace(C)*K;%

regularlization

测试用例(瑞士卷,貌似挺好吃的):

clear all,clc

N = 2000;

K = 12;

d = 2;

% Plot true manfold

tt0 = (3*pi/2)*(1+2*[0:0.02:1]); hh =

[0:0.125:1]*30;

xx=

(tt0.*cos(tt0))'*ones(size(hh));

yy=

ones(size(tt0))'*hh;

zz=

(tt0.*sin(tt0))'*ones(size(hh));

cc=

tt0'*ones(size(hh));

subplot(1,3,1); cla;

surf(xx,yy,zz,cc);

view([12 20]); grid off; axis off; hold

on;

lnx=-5*[3,3,3;3,-4,3]; lny=[0,0,0;32,0,0];

lnz=-5*[3,3,3;3,3,-3];

lnh=line(lnx,lny,lnz);

set(lnh,'Color',[1,1,1],'LineWidth',2,'LineStyle','-','Clipping','off');

axis([-15,20,0,32,-15,15]);

%generate sample data

tt=

(3*pi/2)*(1+2*rand(1,N));

height = 21*rand(1,N);

X= [tt.*cos(tt);

height; tt.*sin(tt)];

%scatter plot of sampled data

subplot(1,3,2); cla;

scatter3(X(1,:),X(2,:),X(3,:),12,tt,'+');

view([12 20]); grid off; axis off; hold

on;

lnh=line(lnx,lny,lnz);

set(lnh,'Color',[1,1,1],'LineWidth',2,'LineStyle','-','Clipping','off');

axis([-15,20,0,32,-15,15]); drawnow;

%run LLE algorithm

Y=lle(X,K,d);

%scatterplot of embedding

subplot(1,3,3); cla;

scatter(Y(1,:),Y(2,:),12,tt,'+');

grid off;

set(gca,'XTick',[]);

set(gca,'YTick',[]);

另外还测试了一张S-CURVE,如下所示:

从上面这两张图可以看出,LLE算法是一种针对非线性数据的降维方法,处理后的低维数据均能够保持原有的拓扑关系。

现在LLE算法已经广泛应用于图像数据的分类与聚类、文字识别、多维数据的可视化、以及生物信息学等领域中。我目前所做的工作是基于Sparse

Representation的Super

Resolution,看的一篇有介绍使用这种算法进行数据降维的工作,后续有时间会继续写下去。

参考:

1、Roweis

S T, Saul L K. Nonlinear dimensionality reduction by locally linear

embedding[J]. Science, 2000, 290(5500):

2323-2326.

2、算法:http://www.cs.nyu.edu/~roweis/lle/code.html